Mitigating LWLock Contention in PostgreSQL: Optimizing Fast-Path Locking, Partition Pruning, and Index Strategy for High-Concurrency Workloads

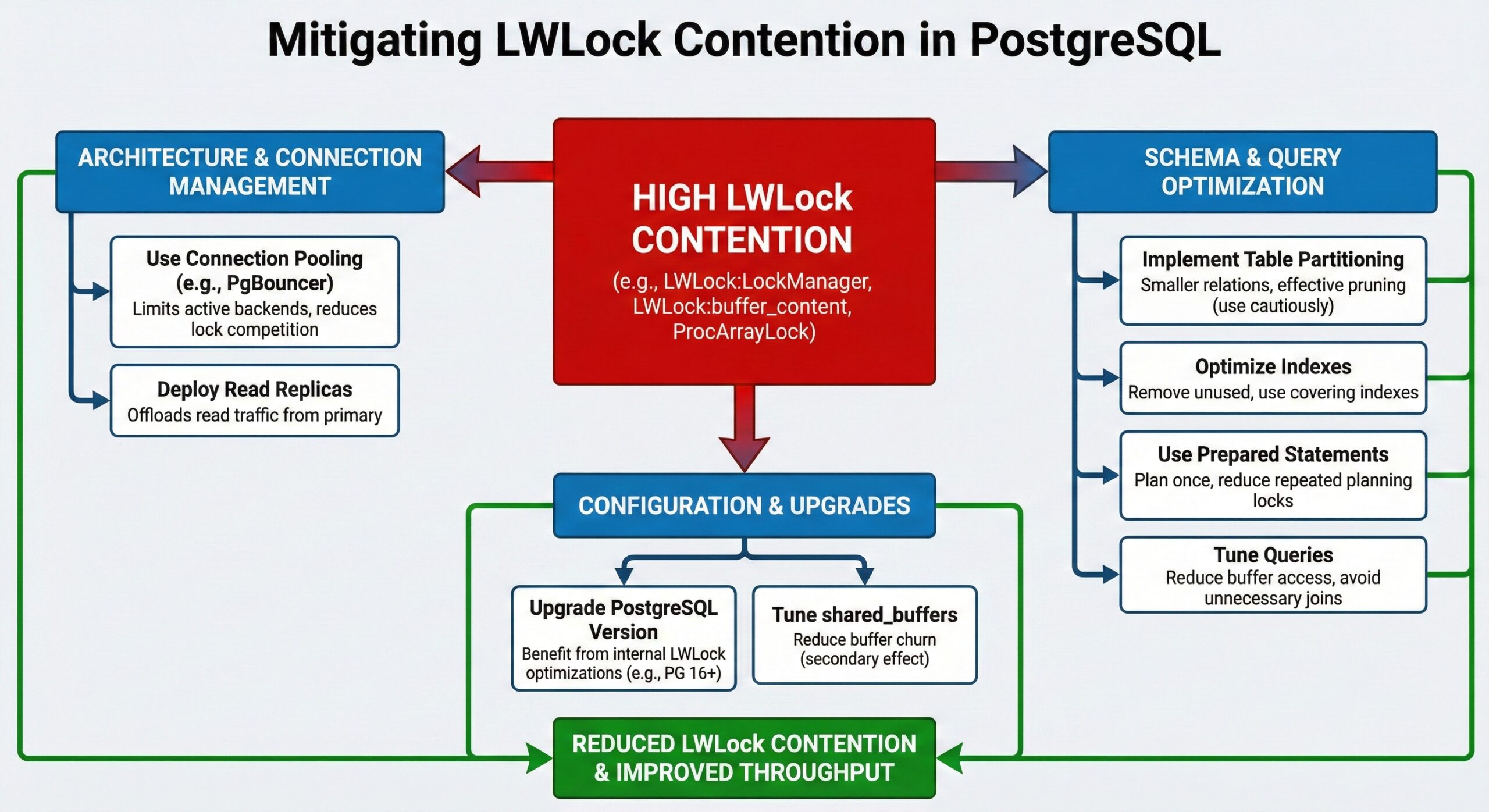

Improving PostgreSQL performance under lock manager contention necessitates a detailed understanding of Lightweight Locks and fast-path locking behavior, followed by the application of targeted tuning strategies—such as partition pruning, index optimization, join simplification, and monitoring—structured as problem-symptom-solution with comprehensive technical keyword coverage.

LWLock Contention in PostgreSQL

PostgreSQL employs Lightweight Locks to coordinate access to shared memory structures, including the buffer pool, WAL subsystem, and the lock manager itself. These lightweight synchronization primitives are not user-visible locks (like row or table locks), but internal spinlocks protecting critical sections in shared memory. Contention arises when multiple backend processes repeatedly attempt to acquire the same Lightweight Lock, leading to spinning, increased CPU utilization, and degraded throughput. In high-concurrency, read-intensive environments—particularly on cloud-managed instances such as Amazon Aurora PostgreSQL—this often manifests as significant wait events under LWLock:LockManager, even in workloads composed entirely of SELECT statements.

Understanding Lightweight Lock dynamics is crucial for optimizing performance.

Fast-Path vs. Slow-Path Locking Mechanism

To minimize Lightweight Lock overhead, PostgreSQL implements a fast-path locking mechanism. Each backend maintains a local array of 16 fast-path lock slots, capable of storing weak lock modes such as AccessShareLock, RowShareLock, and RowExclusiveLock on relations and their indexes. As long as a session acquires no more than 16 distinct relation locks and does not require stronger lock modes (e.g., ExclusiveLock), lock management occurs entirely in local memory, avoiding contention on shared data structures and the associated partitioned Lightweight Locks that protect them. Once the 16-relation threshold is exceeded, PostgreSQL promotes the session’s lock set to the shared lock hash table, requiring acquisition of partition-level Lightweight Locks in the lock manager. This transition to the slow path significantly increases the probability of LWLock:LockManager contention under high concurrency.

Why Read-Only Queries Contribute to Lock Contention

Despite being read-only, queries in modern OLTP schemas often access numerous relations due to table partitioning, extensive indexing, and complex join topologies. A single SELECT may access a partitioned parent table, multiple child partitions, the primary key index (typically a B-tree), and several secondary indexes—all of which require AccessShareLock acquisition. Under concurrent execution, sessions exhaust their fast-path slots, forcing lock entries into the shared hash table. This results in a large number of AccessShareLock records contending for the same set of partitioned Lightweight Locks in the lock manager, creating a scalability bottleneck .

Efficiently managing Lightweight Lock contention is vital for system stability.

Partition Pruning as a Performance Optimization

Partition pruning reduces the number of partitions and associated indexes a query must lock by eliminating irrelevant partitions at query plan time. This directly decreases the per-session lock footprint and increases the likelihood that all required locks fit within the 16 fast-path slots. Empirical testing on a monthly-partitioned orders table demonstrated that unpruned queries—scanning all partitions—resulted in mixed fast-path and slow-path locking and significant LWLock:LockManager wait times. In contrast, pruned queries targeting specific partitions remained entirely within the fast path, enabling substantially higher throughput and better scalability .

Benchmark Results Demonstrating Tuning Impact

Performance benchmarks using pgbench with 100 clients and 10 worker threads on a partitioned workload revealed substantial differences. An unpruned SELECT count(*) FROM orders achieved approximately 42 million transactions over 15 minutes (~46K TPS), with roughly one-third of the database load attributable to LWLock:LockManager waits. After rewriting the workload to enable effective partition pruning—such as using PL/pgSQL to inject runtime constants visible to the query planner—throughput increased to about 53 million transactions in the same duration (~59K TPS). Concurrently, LWLock:LockManager waits diminished to negligible levels, shifting the bottleneck to CPU utilization and indicating optimal lock manager efficiency .

Overhead of Excessive Indexing

Even on non-partitioned tables, a high number of B-tree indexes increases lock acquisition overhead. For a simple point lookup, PostgreSQL must acquire an AccessShareLock on the table, its primary key index, and any additional indexes that might be consulted during planning or execution. In a test case involving an items table with 20 B-tree indexes, a single index-only scan on the name column required 22 relation locks. This exceeded the 16 fast-path slots, spilling 6 locks into the shared lock hash table. Under high concurrency, this inefficiency compounds, leading to severe LWLock:LockManager contention .

Mitigating Index Bloat and Redundancy

Operationally, unused or rarely accessed indexes contribute to planning overhead, increased catalog contention, and elevated internal locking pressure. Regular review of index usage via pg_stat_user_indexes is critical in high-transaction environments. Eliminating redundant composite indexes and dropping unused structures reduces the average lock footprint per query, preserves fast-path capacity across backends, and decreases contention on the shared lock hash table partitions during peak load periods .

Lock Footprint of Multi-Join Queries

Complex schemas, such as those in e-commerce applications involving users, carts, cart_items, items, orders, and payments, exacerbate lock contention. A single multi-join SELECT can access dozens of relations and indexes. Experiments with a six-way join showed that even read-only cart or order status queries exceeded the 16-relation threshold, spilling locks into the shared hash table and triggering LWLock:LockManager waits. This underscores the importance of lock-aware query design, where join complexity is minimized or structured to reduce the number of concurrently accessed relations.

Practical Strategies for Reducing Lock Manager Contention

To maximize fast-path utilization and minimize LWLock contention, database architects should:

- Design partitioned tables with query predicates that enable compile-time or runtime partition pruning (e.g., using stable expressions or runtime constants).

- Avoid patterns such as CTEs that mask filter conditions from the planner, preventing effective pruning.

- Conduct regular index audits to remove unused or redundant indexes.

- Simplify join logic where feasible to reduce the number of relations accessed per transaction.

- Monitor pg_locks and wait events (e.g., LWLock:LockManager) proactively.

- Leverage monitoring tools such as Amazon CloudWatch and RDS Database Insights to detect early signs of lock manager saturation.

Early detection and mitigation of lock manager contention prevent throughput ceilings in CPU-bound PostgreSQL workloads.